How to estimate the cost of a bug?

Compiling a list of the costliest software bugs in history is a tricky task. The final selection largely depends on how these expenses are calculated and which events are considered to be direct consequences of the bug.

When estimating the cost of a software bug, you can factor in items such as:

- repair and recovery expenses

- product returns and refunds

- costs of operational disruptions

- delayed product release

- regulatory fines

- legal settlements

- lost sales

- customer churn

- customer support expenses

- stock price drop

- a range of possible consequences of reputational damage

But agreeing on exactly which of these should be taken into account in each particular case is virtually impossible. Moreover, some companies choose not to disclose any official numbers at all, and let’s not even get started on the complexities of inflation adjustments.

Take the recent Meta outage, for example. The company is estimated to have lost $60-$100 million in advertising revenue over 10 hours, but if you factor in the 1.5% drop in market value, the total damage can be put as high as $3 billion!

We tried to use the most credible and reasonable estimates of costs directly linked to software bugs, but because of the methodological difficulties mentioned above, the top 10 selection inevitably remains somewhat subjective and loose. In any event, we picked 10 monumental failures that would surely make most top 10 lists thanks to the massive costs they generated. To avoid further complications, we’ll present them in chronological order rather than ranking them.

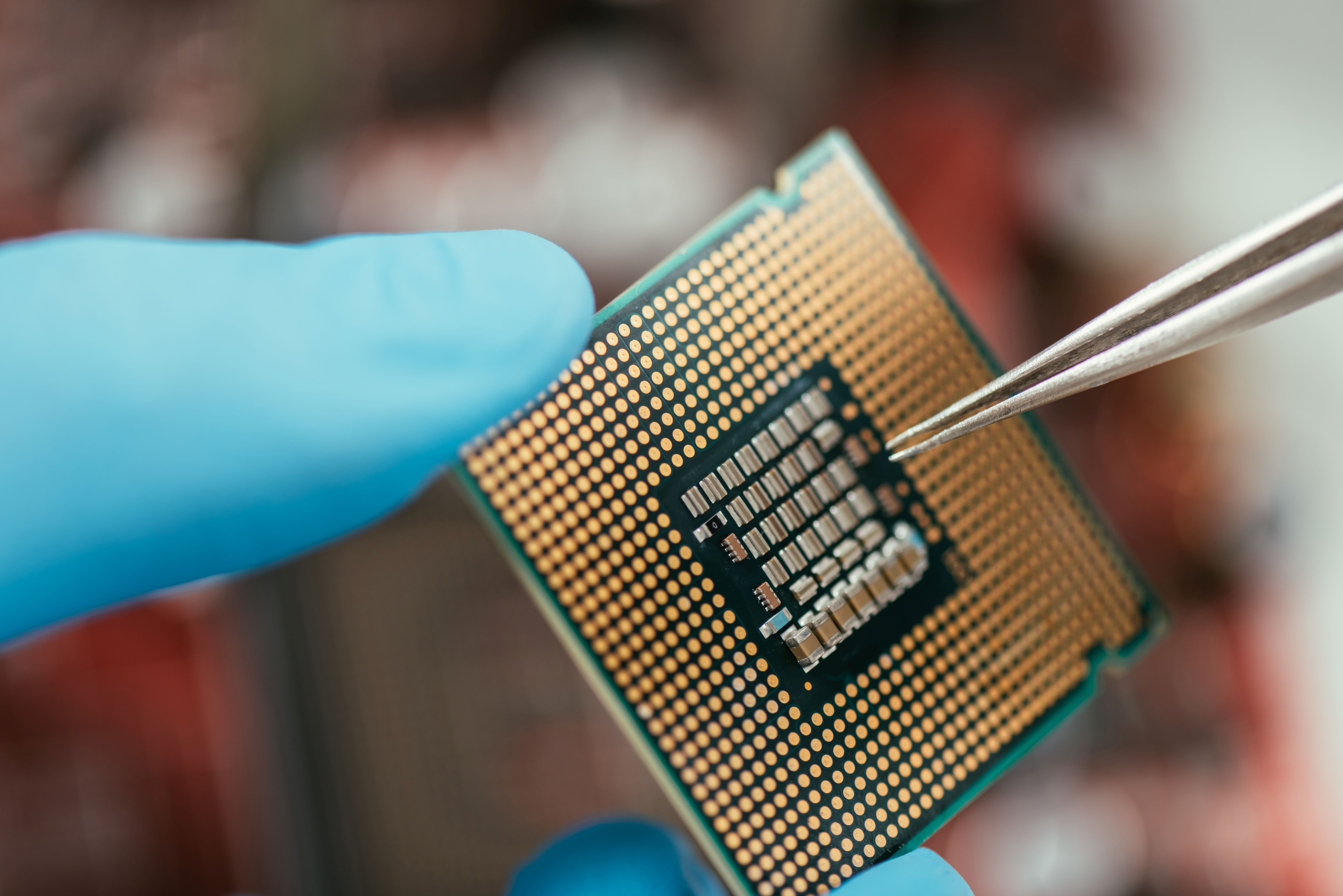

1. Intel’s Pentium processor FDIV error (1994)

The Pentium FDIV bug was a flaw in the Intel Pentium processor's floating-point division (FDIV) operation, discovered in 1994. For a small set of operand values, the division returned slightly incorrect results, which could lead to calculation errors under specific conditions.

The affected results were incorrect anywhere from the fourth to the ninth decimal place, and the error occurred only under the extremely rare condition of the divisor or dividend having a very specific bit pattern. Intel announced that an average spreadsheet user might encounter the bug once every 27,000 years and that it would likely have no impact whatsoever on most users.

Hence, Intel initially tried to downplay the importance of the bug. However, this error could severely affect scientific research, engineering calculations, or financial computations where exact numbers are crucial, and small differences can accumulate significantly over time, creating big problems. So, after substantial public pressure and media scrutiny, Intel decided to offer a no-questions-asked replacement for those who felt their processor's performance was compromised by the bug. It is estimated that the total cost of replacing these processors amounted to around $475 million.
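To make the scale of the error concrete, here is a small Python sketch built around the division 4195835 / 3145727, the test case most widely cited when the bug became public. It cannot reproduce the flaw on modern hardware; instead, it hard-codes the quotient commonly reported for affected chips and compares it with the correct one. The residual check x - (x / y) * y, which should be zero, famously came out as 256 on flawed Pentiums.

```python
# Worked FDIV example using the widely cited test operands.
# FLAWED_QUOTIENT is the value commonly reported for affected Pentium chips;
# it is hard-coded here, not reproduced by this code.

DIVIDEND = 4195835
DIVISOR = 3145727
FLAWED_QUOTIENT = 1.333739068902037589  # as reported for a flawed Pentium


def residual(x: float, y: float, q: float) -> float:
    """Return x - q * y, which is ~0 when q is the correct quotient x / y."""
    return x - q * y


# Computed by this machine's (correct) floating-point unit.
correct_quotient = DIVIDEND / DIVISOR
relative_error = abs(correct_quotient - FLAWED_QUOTIENT) / correct_quotient

print(f"correct quotient:   {correct_quotient:.12f}")
print(f"flawed quotient:    {FLAWED_QUOTIENT:.12f}")
print(f"relative error:     {relative_error:.2e}")
print(f"residual (correct): {residual(DIVIDEND, DIVISOR, correct_quotient):.6f}")
print(f"residual (flawed):  {residual(DIVIDEND, DIVISOR, FLAWED_QUOTIENT):.6f}")
```

The relative error is roughly 6 × 10⁻⁵: negligible for a household budget, but enough to matter once such values feed into iterative scientific or financial calculations.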

2. Mars Climate Orbiter unit conversion issue (1998-1999)

In a number of industries, a seemingly tiny and trivial oversight can produce dramatic consequences. The aerospace sector is a great example, and we’ve seen multiple instances throughout history where small issues resulted in major disasters. We’ll look into one of the most notable examples – the malfunction of the Mars Climate Orbiter.

The Mars Climate Orbiter was part of NASA's Mars Surveyor '98 program, with the primary mission of studying Mars' atmosphere and climate. However, the spacecraft was lost upon its arrival at Mars on September 23, 1999, due to a catastrophic navigation error.

The failure was traced back to a critical software interface between the ground-based navigation team and the spacecraft's thruster control system. The navigation team used metric units (newton-seconds) for its calculations, as is standard in space missions. In contrast, the contractor responsible for developing the orbiter's thruster control system used imperial units (pound-force seconds) in its software. This discrepancy was not caught during the development, testing, or operational phases of the mission.

As the spacecraft made its 286-day journey to Mars, small errors in its trajectory were corrected through thruster firings. Due to the unit mismatch, these corrections were off by a factor of 4.45, causing the spacecraft to gradually deviate from its intended path. By the time it reached Mars, the cumulative effect of these incorrect adjustments had placed it on a dangerous trajectory. The orbiter descended to an altitude roughly 100 kilometers below its target, where it encountered denser atmosphere and was either destroyed or skipped back out into space.

This seemingly trivial oversight led to the complete collapse of a mission valued at over $320 million.
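The mechanism behind the mismatch can be illustrated with a short Python sketch. It assumes one piece of software reports thruster impulse in pound-force seconds while another reads the same number as newton-seconds; the function names and the sample value of 10.0 are hypothetical. The `Impulse` class shows one common defensive pattern: converting to a single unit system at the boundary, so raw numbers never cross an interface without their units.

```python
# Sketch of the Mars Climate Orbiter unit mismatch.
# Assumption: one side reports impulse in pound-force seconds (lbf*s) while the
# other side reads the same number as newton-seconds (N*s).
# Function names and the sample value are hypothetical.

from dataclasses import dataclass

LBF_TO_NEWTON = 4.4482216152605  # 1 lbf in newtons (exact by definition)


def reported_impulse_lbf_s() -> float:
    """Hypothetical ground-software output, in lbf*s."""
    return 10.0


def naive_model_impulse() -> float:
    """Buggy consumer: uses the raw number as N*s without any conversion."""
    return reported_impulse_lbf_s()


@dataclass(frozen=True)
class Impulse:
    """Impulse stored internally in SI units (N*s)."""
    newton_seconds: float

    @classmethod
    def from_lbf_s(cls, value: float) -> "Impulse":
        # Convert once, at the interface boundary.
        return cls(value * LBF_TO_NEWTON)


actual = Impulse.from_lbf_s(reported_impulse_lbf_s())
assumed = naive_model_impulse()

print(f"actual impulse:       {actual.newton_seconds:.3f} N*s")
print(f"modeled impulse:      {assumed:.3f} N*s")
print(f"underestimate factor: {actual.newton_seconds / assumed:.2f}")
```

Since 1 lbf equals about 4.448 N, every impulse fed into the trajectory model under this assumption is understated by that factor, which is where the 4.45 figure comes from.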

3. Global struggle to prevent the Y2K bug (19?? - 2000)

The anticipation of the year 2000 was accompanied by a mix of fear, skepticism, and a massive mobilization of resources to avert potential disasters. Media coverage often focused on worst-case scenarios, such as planes falling from the sky, stock markets collapsing, and general doomsday predictions. Some people even stocked up on food, water, and weapons for the upcoming apocalypse.

It all started with a simple trick to save memory, which was very expensive in the early days of modern computing. Storing years in a two-digit format (writing “67” instead of “1967”) saved two digits per date and seemed like a good idea until the year 2000 drew near. Experts realized that if computer systems interpreted “00” as “1900” rather than “2000”, absolute chaos could ensue. Because nearly every sector of life relies on automated systems, numerous pieces of critical infrastructure, from banking software calculating mortgages to control systems for nuclear reactors, could potentially fail and trigger an unprecedented chain reaction.
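The core of the problem, and one widely used remediation known as “windowing”, fit in a few lines of Python. The pivot of 50 is an illustrative assumption; each organization chose its own cut-off, and many instead expanded stored dates to four digits.

```python
# Sketch of the Y2K two-digit-year problem and the "windowing" remediation.
# The pivot value of 50 is an illustrative assumption.

def naive_year(yy: str) -> int:
    """Pre-Y2K assumption: every two-digit year belongs to the 1900s."""
    return 1900 + int(yy)


def windowed_year(yy: str, pivot: int = 50) -> int:
    """Map values below the pivot to the 2000s and the rest to the 1900s."""
    value = int(yy)
    return 2000 + value if value < pivot else 1900 + value


def age_in_years(birth_yy: str, current_yy: str, to_year) -> int:
    """Compute an age from two-digit years using the given interpretation."""
    return to_year(current_yy) - to_year(birth_yy)


# Someone born in 1967, checked in the year 2000 ("00"):
print(age_in_years("67", "00", naive_year))     # -67 (1900 - 1967)
print(age_in_years("67", "00", windowed_year))  # 33 (2000 - 1967)
```

Windowing only moves the ambiguity rather than removing it. Python’s own `%y` parsing, for instance, follows the POSIX convention of mapping 00-68 to 2000-2068, so `datetime.strptime("67", "%y")` yields the year 2067, not 1967.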

Y2K was by far the most expensive bug in human history, costing anywhere from $300 billion to $600 billion worldwide. The vast majority of this money was not spent on fixing the consequences of failures, but on preemptive steps to prevent them. Governments and businesses undertook extensive efforts to review and upgrade their computer systems. This included auditing countless lines of code, replacing or upgrading hardware and software systems, testing, implementation, consulting fees, contingency planning, and other related activities.

However, the cost seems to have been worth it since the world is still spinning, and the 300-600 billion dollars spent prevented potentially much larger expenses. Admittedly, there were criticisms post-2000 regarding whether the threat was exaggerated and if the massive expenditures were justified, and we’ll probably never know for sure if these concerns are valid. But the list of problems the Y2K bug caused despite all the protective efforts isn’t particularly reassuring and underscores the critical importance of strategic planning and long-term vision in software development.

4. Toyota’s unintended acceleration problem (2009-2011)

The unintended acceleration affair stirred great controversies and proved just how difficult it is sometimes to detect what exactly causes the unwanted behavior of any system. From 2009 to 2011, there was a series of complaints, which quickly turned into legal actions, regarding certain vehicles suddenly accelerating beyond the driver's control. The issue led to a large number of accidents, some fatal, and resulted in significant public safety concerns.

Toyota initially attributed the problem to several potential causes, focusing largely on apparent issues with floor mats and accelerator pedals. They addressed the issues through recalls for mechanical fixes on mats and pedals and by installing a brake override system in affected vehicles. As a result, the number of incidents reportedly decreased, but there were still numerous claims that the problem persisted, suggesting that not all factors causing the issue had been resolved.

Several investigations were conducted by external experts and agencies, but they did not find electronic faults that could lead to unintended acceleration. However, as investigations deepened, attention turned toward the vehicles' electronic throttle control system (ETCS). Critics and some independent researchers showed that software glitches or electromagnetic interference within the vehicle's electronic systems could, in fact, cause unintended acceleration.

In the following years, Toyota fought a tough legal battle to prove they were not at fault, but after numerous inconsistencies were discovered, they agreed to a $1.2 billion legal settlement with the U.S. Department of Justice to avoid prosecution for covering up safety problems. The recall of 8 million vehicles additionally cost them around $2 billion, and a class-action lawsuit in 2013 led to another $1.1 billion settlement. It’s hard to estimate the total cost of this debacle for the Japanese car manufacturer, but it definitely lies north of $5 billion.

5. A 45-minute meltdown at Knight Capital Group (2012)

Knight Capital Group was a financial services firm engaged in market making and trading, known for using complex algorithms and high-frequency trading strategies to facilitate transactions and provide liquidity in the financial markets. On the morning of August 1, 2012, the firm experienced a catastrophic trading issue due to a software glitch that led to uncontrolled buying of stocks.

The event was triggered by the company's attempt to respond quickly to new requirements from the New York Stock Exchange's Retail Liquidity Program (RLP), which was designed to provide better price executions for retail investors.

Knight Capital was implementing a software update to participate in the RLP, but the new code unexpectedly activated an outdated, incompatible piece of code (so-called “dead” code) that had served as a test mechanism until 2003. Its role had been to buy high and sell low in order to verify the behavior of trading algorithms in a controlled environment.

The chaos began when a technician failed to properly deploy the new software to all of the firm's eight servers; only seven received the update. As a result, the eighth server was still running the outdated code and began sending erroneous orders to buy stocks at inflated prices. These orders were not checked or balanced by the new system, leading to massive unintended positions in various stocks.
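Part of what made the incident possible was that the new code could trigger the obsolete logic on any server that had not received the update. A basic safeguard is to verify the whole fleet before switching anything on. The Python sketch below is hypothetical: the server names, version strings, and the idea of gating a feature flag on a version check are illustrative assumptions, not a description of Knight Capital’s actual systems.

```python
# Hypothetical pre-flight check for a rolling deployment: refuse to enable a
# feature flag until every server reports the expected software version.
# Server names and version strings are illustrative assumptions.

from typing import Dict, List

EXPECTED_VERSION = "rlp-1.0"


def stale_servers(versions: Dict[str, str], expected: str) -> List[str]:
    """Return the names of servers not running the expected version."""
    return sorted(name for name, v in versions.items() if v != expected)


def can_enable_flag(versions: Dict[str, str], expected: str) -> bool:
    """Allow the flag only when the whole fleet runs the new code."""
    return not stale_servers(versions, expected)


# Eight servers, one of which missed the update.
fleet = {f"server-{i}": EXPECTED_VERSION for i in range(1, 9)}
fleet["server-8"] = "legacy"

print(stale_servers(fleet, EXPECTED_VERSION))    # ['server-8']
print(can_enable_flag(fleet, EXPECTED_VERSION))  # False
```

In practice, a check like this would sit in the deployment pipeline and query each server directly rather than rely on a hand-maintained dictionary.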

Although this involuntary buying spree lasted for a mere 45 minutes, the aftermath was disastrous for Knight Capital. The firm incurred a total loss of $440 million from these unwanted positions, a figure that threatened its solvency. To rescue the situation, a consortium of investors stepped in to provide $400 million in funding in exchange for equity in the firm. Despite these efforts, the financial strain and damaged reputation led to Knight Capital merging with Getco, forming a new entity known as KCG Holdings in 2013.

Wondering whether the Knight Capital Group catastrophe was avoidable? It absolutely was: the right testing practices make all the difference. Contact us to find out how our testing solutions can optimize your software quality and reliably eliminate critical business risks.

In part 2 of this article, we’ll reveal the rest of the entries from our list. To read more about the most expensive software failures ever recorded, stay tuned!